In this blog from our Understanding Evidence series, Martin Burton explores absence of evidence…

Page last reviewed: 04 April 2023.

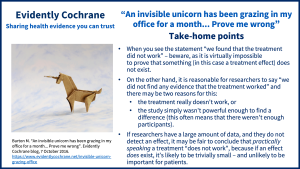

Take-home points

Yes – it’s true. I have been hosting the beast unwittingly. Don’t believe me? Prove it! Come and prove that the invisible unicorn does not exist. Maybe when you come you won’t find any evidence that the unicorn has been here. In fact, I suspect you might not. But – I will counter – you just aren’t looking properly, or carefully enough, or for the right sort of evidence. It has been described as ‘a principle of folk logic that one can’t prove a negative’. Proving something doesn’t exist is difficult: perhaps impossible.

Imagine this trial…

Imagine a trial to examine the effectiveness of a new drug – we’ll call it unicornal. And let’s imagine that the drug – allegedly – leads to improved vision: an ability to see clearly in a darkened room. Without the drug, only about 5% of normal people (1 in 20) can see in these circumstances. To see if the drug works, we take 40 people and randomly allocate them to 2 groups with 20 people in each. One group gets unicornal tablets, the other a dummy, placebo tablet. Nobody knows – neither the people taking the tablets nor the people conducting the experiment – who is taking unicornal and who is taking placebo. We then measure the ability of people to see in the dark. In the group receiving unicornal, just one person can do so. In the placebo group, one person can as well (as you’d expect).

What can we conclude?

Which of the following is correct?

- This study shows that unicornal does not improve vision in the dark (or to put it another way – there is evidence that there is no effect of unicornal).

- This study provides no evidence that unicornal is effective in improving vision in the dark (or – there is an absence of evidence that unicornal is effective).

It can be hard to see the difference between these statements. But the first is about “evidence of absence” and the second is about “absence of evidence”. The first is saying in effect “here is some evidence that allows us to conclude that there is no effect of unicornal”, whilst the second says “we haven’t found any evidence that unicornal works. Maybe it does, maybe it doesn’t – we just don’t know”.

Absence of evidence

There is a key aphorism: the “absence of evidence is not evidence of absence”. This should be taken to heart by everyone interested in understanding evidence. Where does the unicorn come in? Well, there is (you will find when you arrive) an absence of evidence that my unicorn exists. This is not the same as there being evidence that she doesn’t exist. You simply can’t prove that.

In the same way, the evidence from my small study of 40 patients can’t be taken as proving that unicornal does not work (the first statement above). All we can say is that there is no evidence from this study that it does work (the second statement).
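To put rough numbers on this, here is a small Python sketch. It is not part of the trial itself, and the function name `prob_at_most` and the "true" response rates are my own hypothetical choices. It assumes each person responds independently and asks: if unicornal really did raise the proportion who can see in the dark to a given rate, how often would a group of 20 still contain at most one person who can?

```python
from math import comb


def prob_at_most(k: int, n: int, p: float) -> float:
    """Binomial probability of k or fewer responders among n people,
    when each person independently responds with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


# Hypothetical true response rates on unicornal.
# 5% is the background rate, i.e. the drug does nothing at all.
for true_rate in (0.05, 0.10, 0.15):
    chance = prob_at_most(1, 20, true_rate)
    print(f"true rate {true_rate:.0%}: chance of at most 1 in 20 = {chance:.2f}")
```

Under these assumptions, a result like ours would turn up roughly 74% of the time if unicornal did nothing, but still about 39% of the time if it doubled the rate to 10%, and about 18% of the time if it tripled it. One person in 20 is quite compatible with a drug that works.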

Let’s dig deeper

But perhaps we should dig a little deeper. Some of you will already be saying “the study was too small, you were never going to show a difference with so few patients”. Well, maybe or maybe not. If unicornal had had a very dramatic effect, tripling or quadrupling the number of people who could see in the dark, we may well have seen that even in our small study. In other words when the ‘effect size’ is large or dramatic we may not need a big study.

On the other hand, let’s imagine that the “truth” – if only we could flush it out – is that unicornal does improve the proportion of people who can see in the dark, but only marginally. Imagine a group of 10,000 normal people. 5% of them – 500 in all – would be expected to be able to see in the dark. If the drug helps another 50 to see (an increase from 5% to 5.5%), working that out on the basis of a small study of 40 patients is nigh on impossible. We “missed out” on detecting that in our study. There really was a benefit of unicornal and a difference between those taking the drug and those not, but we simply did not find it.
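How many people would we need to have a decent chance of spotting a rise from 5% to 5.5%? The sketch below uses a standard normal-approximation formula for comparing two proportions. The function name `people_per_group` is hypothetical, and the two-sided 5% significance level and 80% power are conventional choices that I am assuming here. They are not figures from the trial.

```python
from math import ceil
from statistics import NormalDist


def people_per_group(p_control: float, p_treated: float,
                     alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of people needed in EACH group to detect the
    difference between two proportions (normal approximation)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_power = z.inv_cdf(power)          # desired chance of finding the effect
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    return ceil((z_alpha + z_power) ** 2 * variance
                / (p_control - p_treated) ** 2)


needed = people_per_group(0.05, 0.055)
print(f"About {needed:,} people per group")
```

With these settings the formula asks for something in the region of 31,000 people in each group, against the 20 we actually had.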

Two possibilities

So, when we find no difference between two groups in a trial like this, there are two possibilities:

- The possibility that there is truly no difference between the groups and however big a trial we do we will never find one. Unicornal has absolutely no effect on vision in the dark.

- The possibility that there is a difference, but that difference is relatively small and we have “missed it” because our study wasn’t powerful enough to find it. By saying not ‘powerful’ enough we often mean that it didn’t include enough people.

What is important?

There is an important issue here. How big a difference between two groups is important to us? Would it be important to us as a group of researchers or patients, if unicornal leads to an improvement in vision in the dark in 1 in 50 of the people taking it? Well, that depends on a number of things, not least the “downside” of taking the drug (its side effects and costs). Would our answer be the same if it only produces an improvement in 1 person in 10,000 taking it? This discussion is all about “minimally important effect sizes” and that is another topic for another day.

A warning…

Coming back to absences. Whenever you see the statement “we found that the treatment did not work” – beware. The writers are claiming evidence of an absent effect. They are likely to be wrong. On the other hand, applaud those who say – perfectly reasonably – “we did not find any evidence that the treatment worked” and remind yourself that there may be two reasons for this: that the treatment really doesn’t work, or that the study simply wasn’t powerful enough to find a difference.

What can we say with confidence?

Enough of unicorns and the nebulous. Allow me to make a very practical final point.

Imagine that you have a study with a million people in each group and still there is no difference between the two. Surely in a case like this, we can say there is no difference and that this treatment is “not effective”? Faced with a large body of evidence such as this, we can at least say with confidence that if there is a difference it is very, very small and of no practical significance. And this is what we do when we have a large body of evidence, large numbers of patients and no detectable difference. We can – having done the appropriate statistical tests – conclude that practically speaking the treatment “does not work” because any effect size is trivially small and further studies to look at the treatment’s effectiveness are unnecessary; we are as certain about this as we need or want to be. This is a conclusion that we come to not uncommonly at the conclusion of a large systematic review or randomized controlled trial.
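One way to see why size makes the difference is to look at the 95% confidence interval around "no difference". The sketch below uses the simple Wald approximation, which is crude for counts as small as 1 in 20 but good enough to show the contrast. The function name `ci_for_difference` is hypothetical, and the counts for the million-per-group study (5% seeing in the dark in both groups) are assumed purely for illustration.

```python
from math import sqrt
from statistics import NormalDist


def ci_for_difference(seen_a: int, seen_b: int, n_per_group: int,
                      level: float = 0.95) -> tuple[float, float]:
    """Wald confidence interval for the difference in proportions
    between two equally sized groups."""
    p_a = seen_a / n_per_group
    p_b = seen_b / n_per_group
    se = sqrt(p_a * (1 - p_a) / n_per_group + p_b * (1 - p_b) / n_per_group)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    diff = p_a - p_b
    return diff - z * se, diff + z * se


small = ci_for_difference(1, 1, 20)                   # our unicornal trial
large = ci_for_difference(50_000, 50_000, 1_000_000)  # assumed counts

print(f"20 per group:        {small[0]:+.4f} to {small[1]:+.4f}")
print(f"1,000,000 per group: {large[0]:+.4f} to {large[1]:+.4f}")
```

On these assumptions the interval for the small trial stretches about 13 or 14 percentage points either side of zero, whereas for the million-per-group study it is well under a tenth of a percentage point either side. Both studies "found no difference", but only the large one leaves no room for an effect that matters.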

Further reading

Alderson P. Absence of evidence is not evidence of absence. BMJ 2004;328(7438):476-7. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC351831/pdf/bmj32800476.pdf


[…] Martin Burton (October 7, 2016). “An invisible unicorn has been grazing in my office for a month… Prove me wrong”. Evidently […]

“The writers are claiming evidence of an absent effect. They are likely to be wrong.” Are you saying they are wrong that the effect is absent, or that they are wrong to say they have evidence that the effect is absent? For the latter, why is it ‘likely’? If it’s a failure of logic it’s just wrong and ‘likely’ doesn’t come into it. If it’s a matter of fact, how do we assess the likelihood other than through evidence, which is what we’re talking about already? Other than the evidence before us, what other means do we have to set against the evidence?

If you take the universe of all the things that could be cause and effect, most of them aren’t. What I had for breakfast doesn’t cause the phases of the moon etc, etc.

Lovely article about the absence of effect. Very glad the unicorn proved too great a carrot for me to pass by.

Interesting and thought provoking article which definitely provided a lively debate on a Friday afternoon

Read it with great pleasure!