We are bombarded with information and advice on our health. Lynda Ware, former GP and Senior Fellow in General Practice at Cochrane UK, gives some guidance on how to assess whether what we read is evidence-based and trustworthy.

Page last checked 26 June 2023.

Take-home points

Knowing how to tell fact from fake news has never been more important than during this time of coronavirus pandemic. Everywhere we look, we are bombarded with information and advice on our health. How can we pick out what is trustworthy and evidence-based?

At Cochrane we are dedicated to providing trusted evidence to help make decisions about medical treatments and healthy living. Systematic reviewers analyse data from trials conducted worldwide to answer important clinical questions. Cochrane does not give health advice or make recommendations but supplies a careful synthesis of the evidence available. This process is without any commercial or political influence. Cochrane’s strapline is:

Trusted evidence. Informed decisions. Better health.

There are a few guiding principles which can help us decide what to believe and what to question.

Here are some examples:

- Anecdotes are unreliable evidence

- Newer is not necessarily better

- More is not necessarily better

- Earlier is not necessarily better

- Treatments can harm

- Association is not the same as causation

Let’s take a deeper look at these points…

Anecdotes are unreliable evidence

“‘Miracle’ malaria drug touted by Trump saved us from coronavirus, claim Americans: Infected patients across US say hydroxychloroquine helped them recover – despite experts’ insistence evidence of its effectiveness is slim.”

This headline is from the Mail Online. So far there is no evidence that these drugs are effective and safe in treating coronavirus. Indeed, a man in Arizona died after taking the antimalarial drug chloroquine in the mistaken belief that it would cure Covid-19. Anecdotes and personal experiences are not based on well-conducted clinical trials. When reading about the latest medical news and health advice in the media, it’s important to ask: ‘is there reliable evidence behind the claims?’ You can find a list of trustworthy, evidence-based websites giving information on health issues at the end of this blog. This includes iHealthFacts, a website recently launched by the Health Research Board Trials Methodology Research Network in collaboration with Evidence Synthesis Ireland and Cochrane Ireland. It is a resource where the public can quickly and easily submit their questions, in order to check the reliability of health claims circulated by social media.

Newer is not necessarily better

The newest (and often most expensive) treatment is not always better than older, tried-and-tested interventions. For example, in 2000 rosiglitazone was licensed as a new medication for Type 2 Diabetes and found to be extremely effective at lowering blood sugar levels. However, in 2010 it was withdrawn due to its link to an increased risk of heart attack and stroke. Adverse effects of new drugs may not become apparent for many years after their introduction and ongoing surveillance is vitally important. For more detail, read this blog, which takes a critical look at the value of new and expensive therapies for medical conditions.

More is not necessarily better

If a small dose is helpful then more must be better? Not necessarily so… A very small dose of aspirin (1/4 to 1/2 tablet) is often prescribed to help prevent heart attacks and strokes. Higher doses are effective pain relievers but do not give cardiovascular protection and are associated with an increased risk of stomach ulcers and bleeding.

Earlier is not necessarily better

Detecting a disease early is not always beneficial to our health and wellbeing. Screening programmes have to carefully weigh up the advantages of early detection and treatment against unnecessary anxiety, side-effects of treatment and the possibility that long term outcomes may not be improved.

Treatments can harm

A tragic example of this is the drug thalidomide, given to treat morning sickness in early pregnancy in the late 1950s and early 1960s, which led to the disability and death of thousands of newborn babies.

Association is not the same as causation

“Good sleep linked to lower risk of heart attack and stroke”

In an article in December 2019, The Times reported that a “restful night ‘cuts risk’ of heart attack”. This headline was based on a study which observed more than 380,000 healthy participants over 8.5 years. The researchers assessed the sleep patterns of the people taking part and documented the development of cardiovascular disease. They concluded that those with the healthiest sleep are 35% less likely to have a heart attack or stroke. However, one of the limitations of the study is that it is an observational study and therefore cannot prove cause and effect; it can highlight an association but there may be other important factors involved.

Some more things to bear in mind when looking at the latest reports of clinical trials:

- What kinds of trials were involved?

- How certain is the evidence?

- Are the participants in the trial similar to me?

- Is the research evidence relevant to me and my circumstances?

- What is the real risk to me?

Expanding on a couple of these points…

What kinds of trials were involved?

The most reliable type of clinical trial is the randomized controlled trial (RCT), which aims to remove, where possible, anything that might bias the results. An observational or cohort study observes a group of people over time and can suggest correlations, as in the example above of sleep and heart disease, but cannot prove a causal link. Systematic reviews, such as those conducted by Cochrane, collect and analyse data from multiple clinical trials.
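For readers who like to see the idea in code, here is a minimal sketch of what random allocation in an RCT means: chance alone decides who goes into the intervention group and who goes into the control group. The function name `randomise`, the participant labels and the seed are all hypothetical, chosen only for illustration. Real trials use more careful procedures than a simple shuffle.

```python
import random


def randomise(participants, seed=None):
    """Randomly split participants into an intervention and a control group.

    Hypothetical helper for illustration only: a simple shuffle and split,
    not the allocation procedure of any particular trial.
    """
    rng = random.Random(seed)      # a seed makes the allocation reproducible
    shuffled = list(participants)  # copy, so the caller's list is untouched
    rng.shuffle(shuffled)          # chance alone decides the order
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Ten made-up participants, split into two groups of five.
participants = [f"participant_{i}" for i in range(1, 11)]
intervention, control = randomise(participants, seed=42)

print("Intervention group:", intervention)
print("Control group:", control)
```

Because neither the researchers nor the participants choose the groups, differences between the groups at the end of the trial are more likely to be due to the treatment than to who was picked.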

What is the risk to me?

When we read media headlines, we are often told about how our risk of benefit or harm can be changed by a treatment or alteration in our lifestyle.

“Processed meats pose the same cancer risk as smoking and asbestos, reports say.”

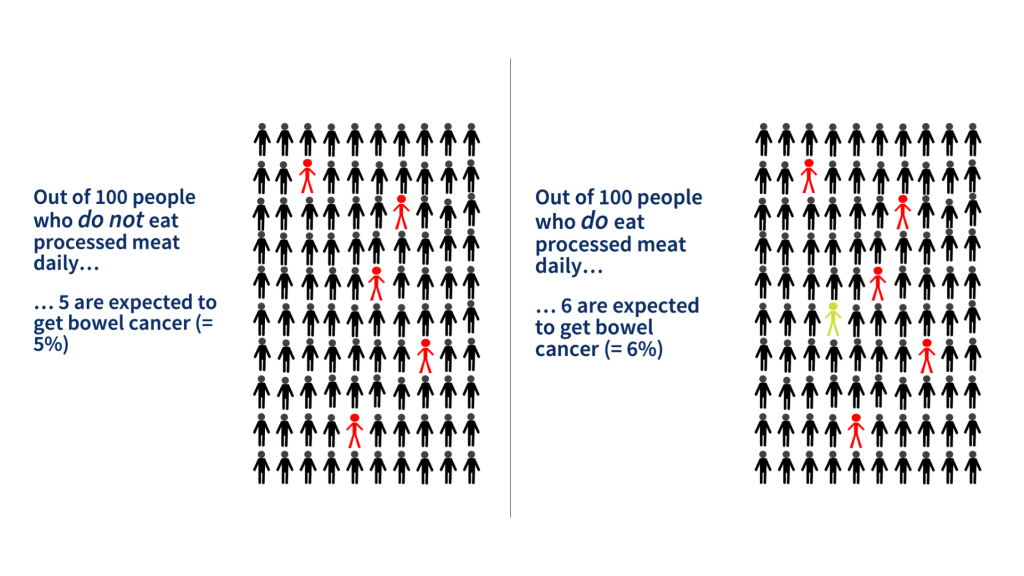

This is a headline from The Guardian in 2015, following a WHO press statement concerning the increased risk of lower bowel cancer associated with eating processed meats. It wrongly suggests that eating foods such as bacon and sausages is “a cancer risk on the scale of smoking and asbestos”. What the WHO stated was that there was sufficient evidence to conclude that processed meats are Group 1 carcinogens (substances that are definitely associated with the development of cancer). Cigarettes and asbestos are also Group 1 carcinogens. The statement made no comment on comparative risks, and smoking is a considerably riskier activity than eating a bacon sandwich.

The WHO also stated that eating 50g of processed meat a day increased the risk of developing colorectal cancer by around 20%. This sounds quite alarming but it only tells us how the risk has changed (this is the relative risk), not how it affects us personally. We need to know our baseline risk so that we can work out how much it will change. In fact, our lifetime risk of developing colorectal cancer is 5% (i.e. five people in every 100 will get it). This is called our absolute risk. By eating the equivalent of two rashers of bacon per day this goes up by a fifth (that’s the 20%) to 6% (i.e. six people in every 100), an increase of one extra person per 100 over the course of a lifetime. Not quite so alarming. With this information, i.e. knowing the change in the absolute risk, we are able to make an informed decision about whether we want to alter our eating habits.
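The arithmetic above can be written out as a short sketch. It uses only the two figures quoted in this blog (a 5% baseline lifetime risk and a relative increase of around 20%); the function name `absolute_risk_after` and the variable names are hypothetical, chosen for illustration.

```python
def absolute_risk_after(baseline_risk, relative_increase):
    """Return the new absolute risk after a relative increase is applied.

    Both arguments are proportions, e.g. 0.05 for 5% and 0.20 for 20%.
    """
    return baseline_risk * (1 + relative_increase)


baseline = 0.05           # lifetime risk quoted in the text: 5 in 100
relative_increase = 0.20  # "around 20%" from the headline figure

new_risk = absolute_risk_after(baseline, relative_increase)
extra_per_100 = (new_risk - baseline) * 100

print(f"Baseline risk: {baseline * 100:.0f} in 100")
print(f"Risk with daily processed meat: {new_risk * 100:.0f} in 100")
print(f"Extra cases per 100 people: {extra_per_100:.0f}")
```

The same 20% relative increase applied to a much smaller baseline risk would mean far fewer extra cases, which is why the relative figure alone tells us so little.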

When reading health news headlines, be aware that quoted changes in risk often reflect relative risk and are meaningless unless we are also told our absolute risk.

Remember headlines are designed to catch our attention…

Be sceptical! Headlines can be misleading and are sometimes plain wrong. In their defence, many journalists do strive to report accurately. However, like us they can be persuaded by compelling numbers. And, of course, headlines sell papers.

Reliable sources of information

It’s hard knowing what to believe from all the health advice we encounter every day. Here are some websites that can be trusted to give measured, evidence-based information:

- cochranelibrary.com

- cochrane.org

- evidentlycochrane.net

- students4bestevidence.net

- nice.org.uk

- ihealthfacts.ie

- askforevidence.org

- healthtalk.org

- fullfact.org

- nhs.uk

- www.who.int – World Health Organization

- http://www.testingtreatments.org

- senseaboutscience.org

- patient.info

- WHO Coronavirus myth busters

Above all, stay safe, stay well.

Join in the conversation on Twitter with @lynda_ware and @CochraneUK or leave a comment on the blog. Please note, we will not publish comments that link to commercial sites or appear to endorse commercial products.

Lynda Ware has nothing to disclose.
